In the two years since Ben Eisen and I proposed in these pages a set of public management principles for an era of austerity (October 2010), federal and provincial budgets have indeed tightened. Subsequent contributors to Policy Options, particularly Kevin Lynch, have reiterated the need to improve productivity in Canada’s public institutions, which include our universities. At the same time, contributions by Heather Munroe-Blum, Ian Brodie and David Naylor have emphasized the importance of research excellence and the need to improve the international standing of our research universities. Could we design a magic policy bullet that would, in a fiscally prudent way, improve both the productivity of our university system and the reputation of our research universities?

Magic bullets are hard to design, but I suggest that a policy of introducing research performance funding as a component of provincial university operating grants could advance both these objectives, provided that the research performance measures are credible and transparent. I further suggest that Web-accessible databases now provide enough information on publication, citation and grant success to develop such measures.

Canada is unusual in its reluctance to differentiate research from teaching in its funding of higher education and to acknowledge that economies of scale and gains from specialization apply to universities in the same way that they apply to most human endeavours. Other countries have mechanisms that concentrate resources for research where they will be used most productively. In Canada, many provinces have avoided linking funding and research output. Almost uniquely in the world, Ontario provides its operating funding to support university teaching and research on the implicit assumption that all professors are equally capable of making a significant research contribution.

But anyone can see that some professors are better at research than others, in that they win more peer-reviewed grants and generate more publications that are cited by more scholars. Web-based tools can now reveal this to anyone who chooses to type the names of professors into a federal granting council Web site, into Google Scholar or into free on-line tools such as CitationGadget.

To illustrate, here is the distribution of scores on the h-index (an index that attempts to measure both the productivity and the citation impact of a scholar’s published work) for 21 randomly selected associate professors of political science in three Ontario universities: 16, 14, 10, 10, 9, 9, 7, 6, 6, 5, 5, 5, 4, 4, 4, 2, 2, 2, 1, 1, 0. Clearly, the majority of the research contribution in this sample is produced by a minority of the professors.
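The concentration in this sample can be checked with a few lines of Python. This is only a rough sketch: the function names are illustrative, and summing h-index scores as a stand-in for "research contribution" is a simplifying assumption of the example, not a measure proposed in the text.

```python
# h-index scores quoted above for the 21 associate professors.
scores = [16, 14, 10, 10, 9, 9, 7, 6, 6, 5, 5, 5, 4, 4, 4, 2, 2, 2, 1, 1, 0]


def h_index(citations):
    """Largest h such that h papers each have at least h citations."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


def top_share(values, fraction):
    """Share of the summed scores held by the top `fraction` of the sample."""
    ranked = sorted(values, reverse=True)
    k = round(len(ranked) * fraction)
    return sum(ranked[:k]) / sum(ranked)


# A scholar whose papers are cited 10, 8, 5, 4 and 3 times has an h-index of 4.
print(h_index([10, 8, 5, 4, 3]))

# Share of the summed h-index scores held by the top 30 percent (6 of 21).
print(f"{top_share(scores, 0.30):.0%}")
```

On this crude measure, the top six professors account for roughly 56 percent of the summed scores.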

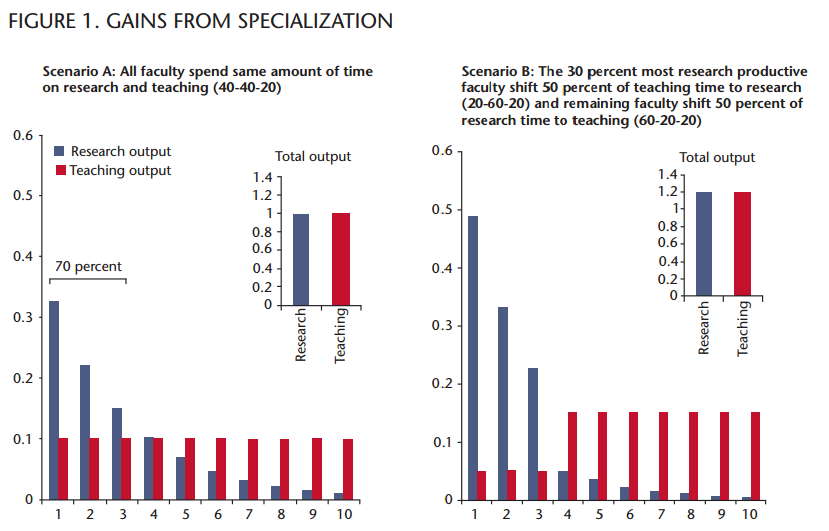

The potential gains from concentrating research resources, including faculty time, on the most productive researchers, can be illustrated in figure 1 below. Let us assume that research output follows a power law distribution such that 70 percent of the research of any population of professors is produced by 30 percent of the faculty. Because teaching performance is, as a general rule, uncorrelated with research productivity, we can assume that the teaching output of each research performance decile is the same.

In scenario A, all faculty spend the same amount of time on research and teaching following Ontario’s standard 40-40-20 split between teaching, research and service. In scenario B, the 30 percent most research-productive faculty shift half of their teaching time to research, resulting in a 20-60-20 workload, and the remaining faculty shift half of their research time to teaching, resulting in a 60-20-20 workload. By comparing the total outputs in the two scenarios, we see that the specialization scenario delivers 20 percent more research and 20 percent more teaching than the uniform workload scenario.
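The arithmetic behind the 20 percent figures can be reproduced in a short Python sketch. It assumes, as the stylized model implicitly does, that each group's output scales linearly with the time it spends on an activity (no diminishing returns). The variable and function names are illustrative.

```python
BASE = 0.40  # baseline share of time spent on each of teaching and research

# Each group: its share of the faculty and its share of baseline research output.
TOP = {"faculty": 0.30, "research": 0.70}   # most research-productive 30 percent
REST = {"faculty": 0.70, "research": 0.30}  # remaining 70 percent


def outputs(top_split, rest_split):
    """Return (research, teaching) totals, where scenario A equals 1.0.

    Each split is a (teaching_time, research_time) pair.
    Assumption: output is proportional to time spent.
    """
    research = 0.0
    teaching = 0.0
    for group, (teach_time, research_time) in ((TOP, top_split), (REST, rest_split)):
        research += group["research"] * research_time / BASE
        # Teaching output per professor is assumed equal across groups.
        teaching += group["faculty"] * teach_time / BASE
    return research, teaching


scenario_a = outputs((0.40, 0.40), (0.40, 0.40))  # uniform 40-40-20
scenario_b = outputs((0.20, 0.60), (0.60, 0.20))  # 20-60-20 and 60-20-20

print(scenario_a)  # baseline, approximately (1.0, 1.0)
print(scenario_b)  # approximately (1.2, 1.2): 20 percent more of each
```

The gain arises because the top group's extra research (70 x 1.5 = 105) outweighs the research the others give up (30 x 0.5 = 15), while the larger group's extra teaching (70 x 1.5 = 105) outweighs the teaching the top group gives up (30 x 0.5 = 15).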

This is, of course, only a stylized model. Are such gains found in real systems that enforce specialization and differentiation? A comparison of cost and performance metrics in the Ontario and California public university systems suggests they are.

The size of California’s public university system is closer to Ontario’s than one might think. Although California has 2.8 times Ontario’s population, community colleges provide the first two years of baccalaureate education for many of its university students, and there are many private universities. As a result, the public university system — the combination of University of California (UC) and California State University (CSU) — is less than one third larger than Ontario’s system of 20 universities in terms of enrolment and state grant. Annual state contribution to university revenue is nearly the same per student (US$7,861 compared with $7,703 in Ontario). The grant per student at UC is 30 percent higher than in Ontario and that for CSU is 18 percent lower. The tuition contribution is 49 percent higher in the combined California system (US$8,422 per student compared with $5,665). The total of grant and tuition per student in the combined California system is 22 percent higher than in Ontario.
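The per-student percentages quoted above follow directly from the dollar figures, as this short Python check shows. It compares the US-dollar and Ontario amounts at face value, with no exchange-rate adjustment, which appears to be how the text treats them. The names are illustrative.

```python
# Per-student revenue figures quoted in the text.
california = {"grant": 7861, "tuition": 8422}  # US$, UC and CSU combined
ontario = {"grant": 7703, "tuition": 5665}     # Ontario dollars


def pct_higher(a, b):
    """Percentage by which a exceeds b."""
    return (a / b - 1) * 100


grant_gap = pct_higher(california["grant"], ontario["grant"])
tuition_gap = pct_higher(california["tuition"], ontario["tuition"])
total_gap = pct_higher(sum(california.values()), sum(ontario.values()))

print(f"grant: {grant_gap:.0f}%  tuition: {tuition_gap:.0f}%  total: {total_gap:.0f}%")
```

The result is a grant gap of about 2 percent, a tuition gap of about 49 percent and a combined gap of about 22 percent.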

In California, a smaller share of the total academic salary budget is devoted to tenure-track faculty (57 percent in UC, 71 percent in Ontario). Average salaries of tenure-track faculty in California are 85 percent of those in Ontario. Tenure-track salaries take 23 percent of the total grant and tuition revenue in California, compared with 37 percent in Ontario.

In California, students receive considerably more teaching from full-time faculty. There are 26 percent more full-time faculty per student and more faculty whose main job is teaching. In addition to 8,452 full-time “ladder rank” professors, UC employs 999 full-time “lecturers,” and CSU employs 1,827 full-time lecturers in addition to its 9,502 full-time tenure-track faculty. Across the two systems, full-time faculty teach 32 percent more courses on average than in Ontario. Combining this result with slightly better faculty-to-student ratios, and including provision for the longer semesters, means that the average California student receives perhaps 55 percent more teaching from full-time faculty than her counterpart in Ontario.

It seems pretty clear that the California public university system is more productive than Ontario’s in terms of teaching. What about research?

In California, a much lower proportion of faculty is expected to devote the same time to research as to teaching. And yet California has five public universities (UC Berkeley, UC Los Angeles, UC San Diego, UC Santa Barbara and UC Davis) in the Times Higher Education top 40. Ontario has one. Professors in California’s public universities have earned 27 Nobel prizes since 1995. Ontario universities have not had a Nobel winner since John Polanyi in 1986. Few would challenge the conclusion that California’s highly differentiated university system produces substantially more research than Ontario’s.

Remarkably, the absolute cost of faculty time for research is greater in Ontario. Given the 40-40-20 split in faculty time between teaching, research and service, this can be taken to be 40 percent of the total spending on salaries and benefits for full-time faculty. This works out to $854 million for Ontario. In California, if one assumes a 40-40-20 split for the 8,452 tenure-track faculty at the 10 UC institutions and a 60-20-20 split for the 9,502 tenure-track faculty at the 23 CSU institutions, the same calculation yields US$674 million. On a per-student basis the state of California spends only 60 percent as much for “faculty time available for research” as Ontario. Even if allowance is made for grant-funded “summer month” salary provided by federal granting councils, the total public cost in California is still below that in Ontario.

The policy conclusion is clear: Canada would get more research and more teaching for its tax and tuition dollars if, like California and most OECD countries, provincial governments applied funding mechanisms that encouraged the minority of faculty who are productive researchers to do more research and all other faculty to do more teaching.

Given the availability of Web-based information on individual research performance, it would be relatively easy for provincial governments to develop a measure to compare the total research contribution of all faculty in each institution and to allocate a portion of their annual university operating grant to each institution on the basis of each institution’s share of the province’s total university research contribution. The research contribution of each professor could be calculated from publication, citation and grant-success data normalized by field of study.
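One way such an allocation could work is sketched below in Python. Everything here is hypothetical: the institutions, fields, scores and fund size are invented for illustration, and dividing each professor's score by the average for his or her field is just one simple form of field normalization, not a method specified in this proposal.

```python
from collections import defaultdict


def allocate_fund(professors, fund):
    """Split `fund` among institutions by share of field-normalized scores.

    professors: list of (institution, field, raw_score) tuples.
    Assumption: a professor's contribution is the raw score divided by
    the mean raw score in the same field.
    """
    by_field = defaultdict(list)
    for _, field, score in professors:
        by_field[field].append(score)
    field_mean = {f: sum(v) / len(v) for f, v in by_field.items()}

    totals = defaultdict(float)
    for institution, field, score in professors:
        mean = field_mean[field]
        totals[institution] += score / mean if mean > 0 else 0.0

    grand_total = sum(totals.values())
    if grand_total == 0:
        return {institution: 0.0 for institution in totals}
    return {inst: fund * t / grand_total for inst, t in totals.items()}


# Hypothetical data: two institutions, two fields with different citation norms.
sample = [
    ("U1", "physics", 30), ("U1", "history", 4),
    ("U2", "physics", 10), ("U2", "history", 8),
]
shares = allocate_fund(sample, fund=100.0)  # fund in $ millions, illustrative
print(shares)
```

In this invented example U1 receives about $54 million and U2 about $46 million. Without normalization the physics scores would dominate and U1 would receive far more.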

These calculations could be performed by a small research assessment unit using publicly available data from international bibliometric services and the national granting councils. In a minority of fields, such as fine arts, where creative contribution is not thought to be adequately captured by such indicators, the research assessment office could arrange for traditional peer panels to review publicly available CVs and portfolios.

The process proposed here obviates many of the criticisms directed at traditional research assessment exercises, such as those conducted in the United Kingdom, Australia and New Zealand. Because the research assessment office would use readily available public information, its data-gathering costs would be low. A very attractive feature of the proposed process, relative to traditional research assessment exercises, is that it places no burden on universities to generate submissions and, after it is set up, costs relatively little to operate. Its methodology and results would be transparent.

Unlike research assessment exercises that operate every five to eight years, the proposed process would be conducted annually. There would not be dramatic fluctuations, because an institution’s share of the research performance fund would be based on a large number of data points that do not change quickly. As for the initial adjustment, the new system could be phased in over a number of years. For example, in Ontario the government could increase the amount of the operating budget going to the research performance fund by $100 million per year until it reaches $750 million.

The proposed process need not impoverish the scholarly endeavours of faculty who are not active researchers. Research contribution is not the same as scholarly endeavour and the purpose of the proposed process is to compare research performance, not scholarly value. A professor can be active in scholarly activity and make important scholarly contributions in the classroom, the university and the community without making a substantial contribution to world knowledge. And the proposed process need not distort academic priorities, because it distributes resources at the institutional — not the individual — level. Provosts, deans and department chairs would continue to be free to exercise their judgment to hire and promote a scholar who they believe is a better researcher than the performance indicator might suggest.

If such a process were used to allocate up to one-quarter of the provincial operating grant it would soon lead to greater differentiation between institutions and, one hopes, to higher international research rankings for those that perform best. Those institutions with the best overall research contribution would be rewarded and could give their top researchers more time for research, and they could also hire additional researchers. Institutions would be encouraged to specialize among fields, since it is easier to attract and retain the highest-level researchers if they are working with other high-level researchers, and the top-level researchers generate much more from the performance fund than mid-level researchers. Institutions with lower than average research contributions would receive less from this fund.

Ideally, research performance funding would be accompanied by teaching performance funding, along the lines that David Trick, Richard VanLoon and I have suggested in Academic Reform: Policy Options for Improving the Quality and Cost Effectiveness of Undergraduate Education in Ontario. But even in the absence of a teaching performance fund, institutions receiving a lower share of the research performance fund would have a strong financial incentive to focus more on teaching — both by taking in more students to increase revenue and by increasing the average teaching load to lower costs.

This proposal is not a completely magic bullet. It would not produce productivity and reputational benefits without political effort and academic leadership. After all, those who do not see the operation of the research contribution assessment process as being in their interest are not expected to be impressed by the argument that it would be timely, more transparent and less costly than traditional evaluations used elsewhere. But if the process were to be designed by respected experts and seen by the general public to be transparent and performance-driven, then governments and academic leaders should find this proposal easier to implement than most other options I have seen to date for improving public-sector productivity in an era of austerity.
