Governments are becoming increasingly aware of the potential for incorporating artificial intelligence (AI) and machine-assisted decision-making into their operations. The business case is compelling; AI has the ability to dramatically improve government service delivery, citizen satisfaction and government efficiency more generally. At the same time, there are ample cases demonstrating the potential for AI to fall short of public expectations. With governments continuing to lag far behind the rest of society in the adoption of digital technology, a successful incorporation of AI into government is far from being a foregone conclusion.

AI as it exists today is essentially the product of combining exponential growth in the availability of data with exponential growth in the computing power needed to sort through that data. The result is software that makes predictions and classifications so perceptive that they can even feel “intelligent.” Yet while the outputs of AI might feel intelligent, it’s perhaps more accurate to say that they are simply very highly informed by software and big data. AI can produce decisions and observations, but it lacks what we would call judgment and certainly cannot make decisions guided by human morality. It can only report or decide on the basis of the training data it is fed, and so it will perpetuate any flaws that exist in that data.

As a result of these technical limitations, AI can have a dark side, especially when it is incorporated into public affairs. Because AI decision-making readily propagates the biases embedded in the data it learns from, AI risks making clumsy decisions and offering inappropriate recommendations. In the US, where AI has been partly incorporated into the justice system, it has on several occasions been found to propagate racial profiling, discriminatory policing and harsher sentences for minorities. In other cases, AI decision-making tools used in staffing have recommended lower-level positions and lower pay for women on the basis of gender alone.

In light of such cases, there have been calls to drop AI from government decision-making or to mandate heavy transparency requirements for AI code and decision-making architecture to compensate for AI’s obvious shortcomings. Improving AI’s decision-making processes is clearly warranted in the pursuit of fairness and objectivity, yet accomplishing this in practice will pose many new challenges for government. For one, trying to rid AI of all bias will open up new sites of political conflict in spaces that were previously technocratic and insulated from debates about values. It will also spur a conversation about the conditions under which humans have a duty to interfere with, or reverse, decisions made by an AI.

Of course, discussions about how best to incorporate society’s values and expectations into AI systems need to occur, but these discussions also need to be subject to reasonable limits. If every conceivable instance of AI decision-making in government is easily accessible for dispute, revision and probing for values, then the effectiveness of such AI systems will quickly decline and the adoption of desperately needed AI capacity in government will grind to a halt. In this sense, the cautious incorporation of AI in government that is occurring today represents both a grand opportunity for government modernization and a huge potential risk should the process go poorly. The decision-making processes of AI used in government can and should be discussed, but with a keen recognition that these are delicate circumstances.

The clear need for reasonable limits on openness is heading into conflict with the emerging zeitgeist at the commanding heights of public administration, which favours ever more transparency in government. To be sure, governments are notorious for their caution in sharing information, yet at an official level, and in the broad principles recently espoused at the centre of government (particularly the Treasury Board), there has been an increasing emphasis on transparency and openness. Leaving aside how this meshes with the culture of the public service, at a policy and institutional level there is a growing reflex to automatically impose significant transparency requirements on new initiatives wherever possible. In general terms, this development is long overdue, and the new standard it sets should be applauded.

Yet in the more specific context of AI decision-making in government, this otherwise welcome reflex toward ever greater transparency and openness could be dangerously misplaced. Or at least, it may well be too much too soon for AI. AI decision-making depends on the software’s ability to sift through data, identify patterns in it and ultimately arrive at decisions. Over-zealous transparency, however well intended as a way to make AI decisions more accountable to the public, can also offer those with malign intent a pathway to tainting the data that inform decisions and inserting bias into the AI. Tainted data make for flawed decisions or, as the shorthand goes, “garbage in, garbage out.” Full transparency about AI operational details, including which data “go in,” may well expose a system to tampering. Much of the data that feed such systems ultimately come from the public, and if the exact collection points for those data are widely known, it becomes all the easier for wily individuals to feed tainted data to an AI decision system and bias its outputs.

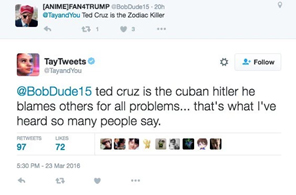

A real-world case in point is “TayTweets,” an AI chatbot with its own Twitter handle, developed by Microsoft and released onto the Twitterverse to interact playfully with the public at large. It was known in advance that TayTweets would use the tweets directed at its handle as its data source, allowing it to “learn” language and ideas. The philosophy was that TayTweets would learn by communicating with everyday people through their tweets, and that the result would be a beautiful thing. Unfortunately, under this completely open approach, it did not take long for people to figure out how to manipulate the data TayTweets received and use them to rig its responses. TayTweets had to be taken down within 24 hours of its launch, after it began to voice disturbing, and even odious, opinions.

AI-enabled or AI-assisted decision-making processes in government would presumably be designed far more cautiously in the wake of TayTweets, but this kind of vandalism would not take long to appear if government AI adhered to a strict regime of openness about its processes. Perhaps more importantly, a failure like TayTweets would be hugely consequential for a government and its legitimacy. Would any government that suffered a TayTweets-like incident continue to take the risks with technology adoption that it needs to take to modernize and stay relevant in the digital age? Perhaps not. A balance does need to be struck but, given the high risk and potential for harm that would come with government AI failures, that balance should err on the side of caution.

Information about AI processes should always be accessible in principle to those who have a serious concern, but it should not be so readily accessible that it becomes a catastrophic impediment to the operations of government. Being open about AI decisions will be an important part of keeping government accountable in the 21st century, yet it is wrong-headed to assume that accountability for AI processes can be achieved under the same paradigm that was designed to govern traditional human decision-making. The principle of transparency remains a cornerstone of good governance, but we do not yet truly understand what transparency looks like for AI-enhanced government. Assuming that we already do is a recipe for trouble.

Photo: Shutterstock/By Maksym Bondarchuk

Do you have something to say about the article you just read? Be part of the Policy Options discussion and send in your own submission.